I had the chance to talk to Nick Fox and Diane Tang from Google’s Ad Quality team about quality scoring and how it impacts the user experience. Excerpts from the article along with additional commentary will be in Friday’s Just Behave column, but here is the full transcript.

Gord: What I wanted to talk about a little bit was just how the quality, particularly in the top sponsored ads, impacts user experience and talk a little about relevancy. Just to set the stage, one of the things I talked about at SES in Toronto was just the fact that as far as a Canadian user goes, because the Canadian ad market isn’t as mature as the American one, we’re not seeing the same acceptance of those sponsored ads at the top. Just because you’re not seeing the brands that you would expect to see for a lot of the queries. You’re not seeing a lot of trusted vendors in that space. They just have not adopted search the same way they have in the States. What we’ve seen in some of our user studies is a greater tendency to avoid that real estate … or at least to quickly scan it and then move down. So, that’s the angle I really want to take here. Just how important ad quality and ad relevance are to that user experience, and then also talking about one thing I’ve always noticed in a number of our user studies. Of all the engines, Google seems to be the most stringent on what it takes to be a qualified ad, to get promoted from the right rail to the top sponsored ads. So that sets a broad framework of what I wanted to talk about today.

Nick: Let me give you a quick overview of who I am and who Diane is and what we work on and then we’ll jump into the topics that you’ve raised. So what Diane and I work on is called Ad Quality and it is essentially everything about how we decide which ads to show on Google and our partners and what they should look like, how much we charge for them and all those types of things. How the auction works…everything from soup to nuts. If you ask us what our goal is…our goal is to make sure our users love our ads. If you ask Larry Page what our goal is…it’s to make our ads as good as our search results. So it’s a heavy focus on making sure that our users are happy and that our users are getting back what they want out of our ads. We sort of think of ourselves as among the first that work on the average product for Google. We represent the user, to make sure the user is getting what they really need. We’re very similar to what we do on the search quality side, making sure that search results are very good.

I think a lot of the things you’ve picked up on are very accurate. In terms of the focus on top ad quality…in general, the focus on quality…I think what you picked up on in your various reports as well as the study in Canada are pretty accurate and pretty much what drives what we are working on here. The big concern that I would have, the main motivation for why I think ad quality is important, is that as a company we need to make sure users continue to trust our ads. If users don’t trust our ads, they will stop looking at the ads, and once they stop looking at the ads they’ll stop clicking on the ads and all is lost. So what we need to make sure we are doing in the long run is that the users believe that the ads will provide them what they are looking for and they will continue looking at the ads as valuable real estate and continue to trust that.

So that is what we are going for. I think as we look at the competitive landscape as well, we see a lot of what you see. We certainly have historically had, and continue to have, much more of a focus on the quality of the ads. Making sure we’re not doing things where we trade off the user experience against revenue. We all have the ability to show more ads or worse ads, but we take a very stringent approach, as you’ve noticed, to making sure we only show the best ads that we believe the user will actually get something out of. If the user’s not going to get something out of the ad, we don’t show the ad. Otherwise the user is going to be less likely to consider ads in the future.

Diane: It’s worth pointing out that basically what we’re saying is that we are taking a very long term view towards making sure our users are happy with our ads and it’s really about making them trust what we give them.

Gord: One thing I’ve noticed in all my conversations whether they’re with Marissa or Matt or you, the first thing that everyone always says at Google is the focus around the user experience. The fact that the user needs to walk away satisfied with their experience. When we’re talking about the search results page, that focuses very specifically on what we’ve called in our reports the “area of greatest promise”. That upper left orientation on the search results page and making sure that whatever is appearing in that area had better be the most relevant result possible for the user. In conversations with other engines I hear things like balanced ecosystems and communities that include both users and advertisers. I’ve always been struck by the focus at Google and I’ve always been a strong believer that corporations need sacred cows, these untouchable driving principles that everyone can rally around. Is that what we’re talking about here with Google?

Nick: I think it is. I think it comes from the top and it comes from the roots. If we were doing a proposal to Larry and Sergey and Eric where we’re saying, “Hey, let’s show a bunch of low quality ads” the first question they’re going to ask is “Is this the right thing for the user?” And if the answer is no, we get kicked out of the room and that’s the end of conversation. So you get that from the top and it permeates all the way through. You hear it when you speak to Marissa and Matt and us. It permeates the conversations we have here as well. It’s not just external when we talk about the user; it’s what the conversation is internally as well. It just exudes through the company because it’s just part of what we think. I wouldn’t say that there isn’t a focus on the advertiser too, it’s just that our belief is that the way you get that balance is by focusing on the user, and as long as the user’s happy, the user’s clicking on the ad, and as long as the user’s clicking on the ad, the advertiser’s getting leads and everything works. If you focus on the advertisers in the short term, maybe the advertisers will be happy in the short term, but in the long term that doesn’t work. That used to be a hard message to get across. It used to be the case that advertisers didn’t really get that. And one of the most rewarding things for me is that the advertisers see that, they get that. Some of the stuff we do in the world of ad quality is frustrating to advertisers because in some cases we’re preventing their ads from running in cases where they’d like them to run. We’ve seen that the advertiser community is actually more receptive to that recently because they understand why we’re doing it and they understand that in the long term, they’re benefiting from it as well. I think that you are seeing that there is a difference in approach between us and our competitors. That we believe the ecosystem thrives if you focus on the users first.

Gord: I’d like to focus on what, to me, is a pretty significant performance delta between right rail and top sponsored. We’ve seen the scan patterns put top sponsored directly in the primary scanning path of users where right rail is more of a side bar that may be considered after the primary results are scanned. With whatever you can share, can you tell me a little about what’s behind that promotion from right rail to top sponsored?

Nick: Yes, it’s based on two things. One is the primary element is the quality of the ad. The highest quality ads get shown on the top. The lower quality ads get shown on the right hand side. We block off the top ads from the top of the auction, if you really believe those are truly excellent ads…

Diane: It’s worth pointing out that we never break auction order…

Nick: One of the things that’s sacred here is making sure that the advertisers have the incentive. In an auction, you want to make sure that the folks who win the auction are the ones who actually did win the auction. You can’t give the prize away to the person who didn’t win the auction. The primary element in that function is the quality of the ad. Another element of the function is what the advertiser’s going to pay for that ad. Which, in some ways, is also a measure of quality. We’ve seen that in most cases, where the advertiser’s willing to pay more, it’s more of a commercial topic. The query itself is more commercial, therefore users are more likely to be interested in ads. So we typically see that queries that have high revenue ads, ads that are likely to generate a lot of revenue for Google, are also the queries where the ads are most relevant to the user, so the user is more likely to be happy as well. So it’s those two factors that go into it. But it is a very high threshold. I don’t want to get into specific numbers, but the fraction of queries that actually show these promoted ads is very small.
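Editor’s sketch: the promotion logic Nick and Diane describe (rank on quality and bid, promote only ads that clear a high bar, never break auction order) can be illustrated roughly as follows. Google does not disclose its actual formula or thresholds, so everything here is an assumption made for illustration: the `Ad` class, the `bid * quality` rank score, the two threshold parameters, and the cap of three top slots are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Ad:
    name: str
    bid: float      # hypothetical maximum cost-per-click
    quality: float  # hypothetical quality estimate between 0 and 1


def rank_score(ad: Ad) -> float:
    # Assumed ranking function: bid weighted by quality.
    return ad.bid * ad.quality


def place_ads(ads, min_quality, min_score, max_top=3):
    """Split ads into (top, right_rail) without reordering the auction."""
    ranked = sorted(ads, key=rank_score, reverse=True)
    top = []
    for ad in ranked:
        clears_bar = ad.quality >= min_quality and rank_score(ad) >= min_score
        if len(top) < max_top and clears_bar:
            top.append(ad)
        else:
            # Stop at the first ad that misses the bar, so a lower-ranked ad
            # is never promoted past a higher-ranked one.
            break
    return top, ranked[len(top):]


ads = [
    Ad("A", bid=2.0, quality=0.9),
    Ad("B", bid=3.0, quality=0.3),
    Ad("C", bid=1.0, quality=0.8),
]
top, right_rail = place_ads(ads, min_quality=0.7, min_score=0.5)
print([ad.name for ad in top], [ad.name for ad in right_rail])
```

In this toy example only A is promoted. C has enough quality on its own, but it ranks below B in the auction, and B misses the quality bar, so promoting C would break auction order.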

Gord: One thing we’ve noticed, actually in an eye tracking study we did on Google China, where the search market is far less mature, is that you very, very seldom see those ads being promoted to top sponsored. So I would imagine that that’s got to be a factor. Is the same threshold applied across all the markets, or does the quality threshold vary from market to market?

Nick: I don’t want to get too much into the specifics of that kind of detail. We do certainly take an approach in each market that we believe is most effective for that market. Handling everything at a global level doesn’t really make a lot of sense because in some cases you have micro markets, or, in the case of China, a large market, where it makes sense to tailor our approach to what makes sense for that market…what users from that market are looking for, what the maturity of that market is. In a market that has a different level of search quality, for example, it might make sense to take a different approach in how we think about ads as well. So that’s what I want to say there. But you’re right, in a market like China that’s less mature and at the early stage of its development, you do see fewer ads at the top of the page; there are just fewer ads there that we believe are good enough to show at the top of the page. Contrast that with a country like the U.S. or the U.K., where these markets are very mature and have the high quality ads we feel comfortable showing at the top, and there we show top ads.

Diane: But market maturity is just one area we look at. There’s also user sophistication with the internet and other key factors. We have to take all this into account to really decide what the approach is on a market basis.

Gord: One of the questions that always comes up every time I sit on a panel that has anything to do with quality scoring is what’s in an ad that might generate a click through is not necessarily what will generate a quality visitor when you carry it forward into conversion. For instance you can entice someone to click through but they may not convert and, of course, if you’re enticing them to click through you’re going to benefit from the quality scoring algorithm. How do we correct that in the future?

Nick: I think there are two things. One is, in general, an ad that’s being honest, and gets a high click rate from being honest, is essentially a very relevant ad and therefore gets a high click through rate. We’ll typically see that that ad also has a high conversion rate. In cases where the advertiser’s not being dishonest, the high click through rate is generally correlated with a high conversion rate. And it’s simply because that ad is more relevant: it’s more relevant in terms of getting the user to click on that ad in the first place, and it’s also more relevant in delivering what that user is looking for once they actually get to the landing page. So you see a good correlation there.

There are cases where advertisers can do things where they’re misleading in their ad text and create an incentive for a user to click on their ad and then not be able to deliver, so the advertiser could say “great deals on iPods” and then they sell iPod cases or something. In that case, the high click through rate is unlikely to be correlated with a high conversion rate because the users are going to be disappointed when they actually end up on the page. The good thing for us is that the conversion rate typically gets reflected in the amount that the advertiser’s actually willing to pay, so that’s one of the reasons why the advertiser’s bid is a relatively decent metric of the quality. For example, in this iPod cases case, because that conversion rate’s likely to be low, the advertiser’s not likely to bid as much for that. The click just isn’t worth as much to them, therefore they’ll bid less and end up getting a lower rank as a result of that. So, in many cases, this doesn’t end up being a problem because that just sort of falls out of the ranking formula. It’s a little bit convoluted.

Gord: Just to restate it to make sure I’ve got it here. You’re saying that if somebody is being dishonest, ultimately the return they’re getting on that will dictate that they have to drop their bid amount, so it will correct itself. If they’re not getting the returns on the back end, they’re not going to pay the same on the front end and ultimately it will just find its proper place.

Nick: What an advertiser should probably be thinking most about is ROI per click…actually, it’s ROI per impression. The ad that’s likely to generate the most value for the user, and therefore the most value to Google as well as the most value to the advertiser, all aligned in a very nice way, is the ad that’s the most likely to generate the most ROI per impression. And because of our ranking formula, those are the ads that are most likely to show up at the top of the auction. And the ones that aren’t fall out. So the advertiser should care about click through rate, but they shouldn’t care about click through rate exclusively, to the extent that that results in a low conversion rate and a low ROI per click for them.
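Editor’s sketch: the self-correcting effect Nick describes can be worked through with some made-up numbers. All figures below are hypothetical, and the two helper functions are illustrative assumptions, not Google’s formula: the value of a click is taken to be conversion rate times profit per conversion, and the value of an impression is click through rate times the value of a click.

```python
def value_per_click(conversion_rate, profit_per_conversion):
    # What one click is worth to the advertiser, on average.
    return conversion_rate * profit_per_conversion


def value_per_impression(ctr, conversion_rate, profit_per_conversion):
    # What one ad impression is worth: chance of a click times click value.
    return ctr * value_per_click(conversion_rate, profit_per_conversion)


# Hypothetical honest ad: modest click through rate, solid conversion rate.
honest = {"ctr": 0.05, "conversion_rate": 0.04, "profit_per_conversion": 50.0}

# Hypothetical misleading ad ("great deals on iPods" that sells iPod cases):
# more clicks, but far fewer of those clicks convert.
misleading = {"ctr": 0.08, "conversion_rate": 0.005, "profit_per_conversion": 50.0}

for label, ad in (("honest", honest), ("misleading", misleading)):
    per_click = value_per_click(ad["conversion_rate"], ad["profit_per_conversion"])
    per_impression = value_per_impression(**ad)
    print(f"{label}: value per click {per_click:.2f}, "
          f"value per impression {per_impression:.3f}")
```

With these numbers the misleading ad wins on click through rate but a click is worth roughly an eighth as much to its advertiser, so a rational bid is far lower. If rank depends on both bid and click through rate, as the interview suggests, the extra clicks do not make up for the lower bid.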

Gord: We talked a little bit about ads being promoted to the top sponsored and over the past three or four years, you have experimented a little bit with the number of ads that you show up there. When we did our first eye tracking study, usually we didn’t see any more than two ads, and that increased to three shortly after. Have you found the right balance with what appears above organic results as far as sponsored results?

Diane: I would say that it’s one of those things where the user base is constantly shifting, the market is constantly shifting. It’s something that we definitely reevaluate frequently. It was definitely a very thought through decision to move to three, and we show three actually very rarely. We seriously consider that when we show three, is it in the best interest for the user? There’s a lot of evaluation of the entire page at that point and not even just the ads, whether or not it was the right thing. We’re very careful to make sure that we’re constantly at the right balance. It’s definitely something that we look at.

Gord: One of the things we’ve noticed in our eye tracking studies is that there’s a tendency on the part of users to “break off” results in consideration sets and the magic number seems to be around four, so what we’ve seen is even if they’re open to looking at sponsored ads, they want to include at least the number one organic result as well, as kind of a baseline for reference. They want to be able to flip back and forth and say, “Okay, that’s the organic result, that’s how relevant I feel that is. If one of the sponsored ads is more relevant, then fine, I’ll click on it.” It seems like that’s a good number for the user to be able to concentrate on at one time, quickly, and then make their decision based on that consideration set that would usually include one or two sponsored ads and at least one organic listing, and where the highest relevancy is. Does that match what you guys have found as well?

Nick: I don’t think we’ve looked at it in the way of consideration sets, along those lines. I think that’s consistent with the outcomes that we’ve had and maybe some of the thought process that led us to our outcome. The net effect is the same outcome. One of the things that we are careful about is making sure that we don’t create an experience where you show no organic results on the page, you know, or at least above the fold on the page. You want to make sure that the user is going to be able to make that decision regarding what they want to click on, and if you just serve the user with one type of result you’re not really helping the user make that type of decision. What we care more about is what the user sees in the above the fold real estate, not quite so much the full result. And probably relatively consistent on certain sets of screen resolutions.

Gord: One of the things that Marissa said when I talked to her a few days ago was that as Google moves into Universal Search results and we’re starting to see different types of results appear on the page, including in some cases images or videos, that opens the door to potentially looking at different presentations of advertising content as well. How does that impact your quality scoring and ultimately how does that impact the user?

Nick: We need to see. I don’t think we know yet. Ultimately it would be our team deciding whether to do that or not, so fortunately we don’t have to worry too much about hooking up the quality score because we would design a quality score that would make sense for it. The team that focuses on what we call Ad UI, that’s the team that’s looking at…it’s a sub group within that…that’s the team that essentially thinks about what should the ads actually look like?

Diane: And what information can we present that’s most useful to the user?

Nick: So in some cases, that information may be an image, in some cases that information may be a video. We need to make sure in doing this that we’re not just showing video ads, because video happens to be catchy. We want to make sure that we’re showing video ads because the video is what actually contains the content that’s actually useful for the user. With Universal Search we found that video search results, for example, can contain that information, so it’s likely that their paid results set could be the same as well. Again, just as in text ads, we’d need to make sure that whatever we do there is user driven rather than anything else and that the users are actually happy with it. There would be a lot of user experimentation that would happen before anything was launched along those lines.

Diane: You can track our blogs as well. All of our experiments show up at some point there.

Gord: Right. Talking a little bit about personalization, you started off by saying that Larry and Sergey have dictated that the ads should be more relevant than the organic results in an ideal situation and just as a point of interest, in our second eye tracking study, when we looked at the success rate of click throughs as far as people actually clicking through to a site that appeared to deliver what they were looking for, for commercial tasks, it was in fact the top sponsored ads that had the highest average success rate of all the links on the page. When we’re looking at Personalization, one of the things that, again, Marissa said is we don’t want our organic results and our sponsored results to be too far out of sync. Although personalization is rolling out on the organic side right now, it would make sense, if that can significantly improve the relevancy to the user, for that to eventually fold into the sponsored results as well. So again, that might be something that would potentially impact quality scoring in the future, right?

Nick: Yes. So we have been looking at some…I’m not sure if the right word is personalization or some sort of user based or task based…what the right word is…changes to how we think about ads. We have made changes to try to get a sense of what the user’s trying to do right now. Whether they’re, for example, in a commercial mind set, and alter how we do ads somewhat based on that type of an understanding of the user’s current task. We’ve done much less with trying to…we’ve done nothing really…with trying to build profiles of the user and trying to understand who the user is and whether the user is a man or woman or a 45 year old or a 25 year old. We haven’t seen that that’s particularly useful for us. You don’t want to personalize users into a corner, you don’t want to create a profile of them that’s not actually reflective of who they are. We don’t want to freak the user out. If you have a qualified user you could risk alienating that user. So we’ve been very hesitant to move in that direction and in general, we think that there’s a lot more we can do that doesn’t require profiles down that path.

Diane: You can think of personalization in a couple of different ways, right? It can manifest itself in regards to the results you actually show. It can also be more about how many ads or even the presentation of those ads with regards to actual information. Those sorts of things. There are many possible directions that can be more fruitful than, like Nick points out, profiling.

Gord: Right, right.

Nick: For example, one of the things that you could theoretically do is, as you know, we changed the background color of our top ads from blue to yellow, because we found that yellow works better in general. You might find that for certain users, green is better, you might find that for certain users, blue is actually better. Those types of things, where you’re able to change things based on what users are responding to, is more appealing to us than these broad user classification types of things. It seems somewhat sketchy.

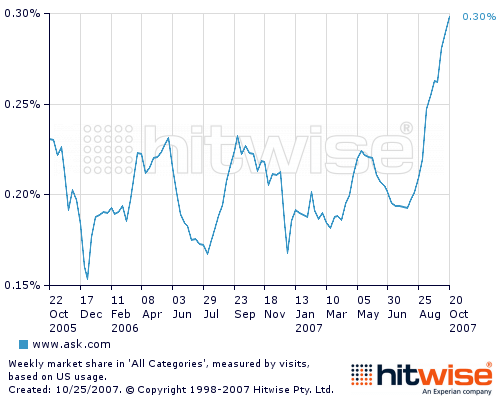

Gord: It was funny. Just before this interview, I was actually talking to Michael Ferguson at Ask.com and one of the things he mentioned that I thought was quite interesting was a different take on personalization. It may get to the point where it’s not just using personalization for the sake of disambiguating intent and improving relevancy, it might actually be using personalization to present results or advertising messages in the form that’s most preferred by the user. So some may prefer video ads. Some may prefer text ads and they may prefer shorter text ads or longer text ads. And I just thought that that was really interesting. Looking at personalization to actually customize how the results are being presented to you. In what format.

Nick: Yes.

Gord: One last question. You’ve talked before about quality scoring and how it impacts two different things. Whether it’s the minimum bid price or whether it’s actually position on the page. And the fact that there are more factors, generally, in the “softer” or “fuzzier” minimum bid algorithm than there are in the “hard” algorithm that determines position on the page. And ideally you would like to see more factors included in all of it. Where is Google at on that line right now?

Nick: There are probably two things. One is that when setting the minimum bid, we have much less information available to us. We don’t know what the specific query is that the user issued. We don’t know what time of day it is. We know very little about the context of what the user is actually trying to do. We don’t know what property that user’s on. There’s a whole lot that we don’t know. What we need to do when we set a minimum bid is much coarser. We just need to be able to say, what do we think this keyword is, what do we think the quality of the ad is, does the keyword meet the objective of the landing page, and make a judgment based on that. But we don’t have the ability to be more nuanced in terms of actually taking into account the context of how the ad is likely to actually show up. There’s always going to be a difference in terms of what we can actually use when we set the minimum bid versus what we use at auction time to set the position. The other piece of it though is there are certain pieces that only affect the minimum bid. Let me give you an example. Landing page quality normally impacts the minimum bid but it doesn’t impact your ranking. The reason for that is mostly from the standpoint of our decision to launch the product and what we thought was the most expedient way to improve the landing page quality of our ads, rather than what we think will be the long term design of the system. So I’d expect things like that to change, where signals like landing page quality should impact not only the minimum CPC but also rank, which ads show at the top of the page, and things like that as well. That’s where you’ll see more convergence. But there’s always going to be more context that we can get at query time to use for the auction than we can for minimum CPC.

In 2006 Wired Editor Chris Anderson released The Long Tail, and suddenly we were finding long tails in everything. The swoop of the power law distribution curve was burned on our consciousness, and search was no exception. Suddenly, the hot new strategy was to move into the long tail of search, those thousands of key phrases that individually may only bring a handful of visitors, but in aggregate, can bring more than the head phrases. At Enquiro, we were no exception. But lately, I haven’t heard as much about long tail campaigns. And I think it’s because our thinking was a little flawed.
